ChatGPT’s Privacy Issues: What’s Going On and Why It Matters for Schools Like Yours

You’ve probably heard of ChatGPT, the AI chatbot that has recently landed in hot water. Italy has temporarily banned it, and other countries are investigating how it handles user data. So what does this mean for you, your staff, and your students when using AI in school?

The issue with how ChatGPT collects data

The main problem is how OpenAI, the company behind ChatGPT, collects the data it uses to train its AI models. OpenAI’s GPT-2 model was trained on about 40 GB of text, while GPT-3, the model family that ChatGPT’s GPT-3.5 descends from, was trained on a whopping 570 GB of filtered text!

ChatGPT’s underlying model is built through a process called “training,” in which it analyses vast amounts of text to learn language patterns, context, and the relationships between words.

For example, imagine a school project where students are asked to write an essay on climate change.

- ChatGPT would have been trained on numerous articles, books, and discussions related to climate change from various sources. This way, it can generate relevant and (sometimes) accurate responses when users ask questions or seek assistance.

- OpenAI may also use new data, including users’ conversations with ChatGPT, to train future versions of the model, so its answers and suggestions can improve over time.

- This dynamic learning process enables AI tools like ChatGPT to become increasingly helpful resources for students and educators.

The Post-Training Phase: ChatGPT’s Privacy Issues

When people chat with ChatGPT, they often share information like their interests, opinions, or experiences. This data helps the AI system better understand human language, context, and personal preferences.

ChatGPT uses what a user shares within a conversation to tailor its responses to that user’s needs and interests, and conversations from a diverse range of users can be used to refine future versions of the model.

However, it’s important to note that using personal data raises concerns about user privacy and data protection.

European data protection regulators are taking notice

This massive data collection is now causing OpenAI some headaches. Data protection authorities in several countries are looking into how OpenAI collects and processes data for ChatGPT. They’re concerned that the company may have collected personal information, such as names or email addresses, without consent or without appropriate privacy safeguards in place.

The Italian Data Protection Authority claims that OpenAI isn’t clear about how it collects user data during the post-training phase, such as when you chat with ChatGPT. Italy has already blocked ChatGPT, and authorities in other countries, including France, Germany, Ireland, and Canada, are also examining it. The European Data Protection Board is even creating a task force to look into ChatGPT across the EU.

The main issues facing OpenAI include the following:

- No age filter for minors: The Italian privacy regulator also objected that ChatGPT has no filter to check users’ ages, which could expose minors to inappropriate content.

- Lack of transparency: Italy’s privacy regulator believes OpenAI hasn’t provided enough information about how ChatGPT collects and processes user data, both during training and during user interactions.

- Inadequate legal basis: The regulator claims that OpenAI lacks a solid legal basis for the massive collection and storage of personal data used to train ChatGPT’s algorithms.

- Inaccurate data processing: ChatGPT does not always provide accurate information, so when it generates content about real people, it may process their personal data incorrectly.

What this means for schools globally

Regulatory oversight is only starting. As a school leader, you should stay informed about the latest developments in AI and their potential impact on education.

Italy’s recent concerns with ChatGPT highlight the importance of understanding data privacy issues and ensuring that the AI tools used in your school prioritise user protection and maintain compliance with data protection regulations. To safeguard your students and staff, consider the following actions:

- Evaluate AI tools carefully: Assess AI vendors’ data privacy policies and practices before implementing their products in your school.

- Educate the school community: Provide training for teachers, students, and staff on responsible AI use and the importance of data privacy.

- Monitor usage and compliance: Regularly review the AI tools used in your school to ensure they remain compliant with privacy regulations as legal frameworks evolve.

- Foster open dialogue: Encourage discussions among educators, students, and parents about AI’s role in education, its benefits, and its potential risks, building a community that is well informed about privacy concerns.

- Establish clear data privacy policies: Develop comprehensive data privacy policies that outline how personal information will be collected, stored, and used. Ensure that these policies are transparent and accessible to students and their families. Clearly set out the circumstances in which AI will be used and if or when personal data will be used with these tools.

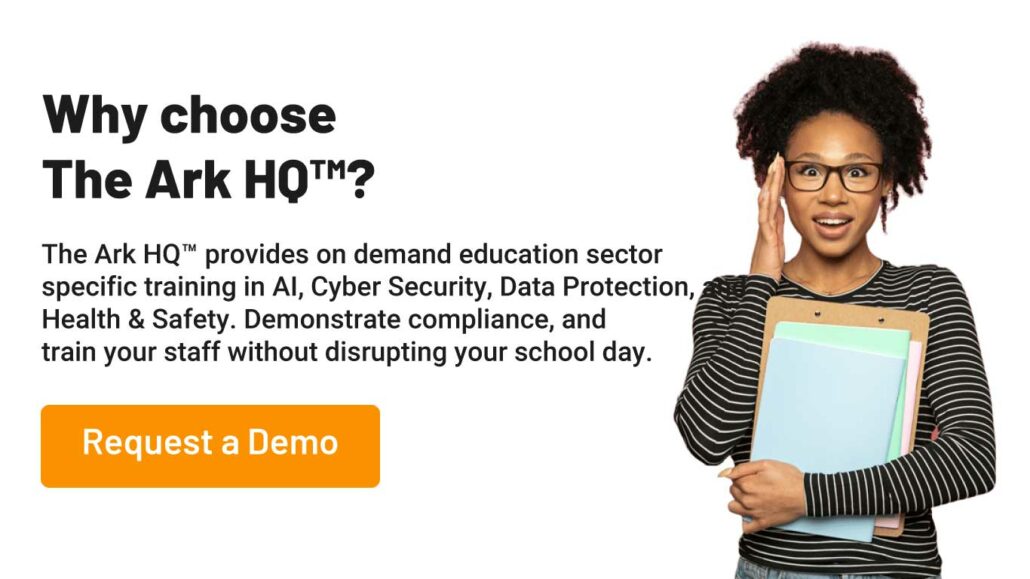

Training on AI

To help school leaders navigate the privacy issues surrounding ChatGPT and protect student data, our Education AI™ course provides comprehensive training on best practices, ethical considerations, and practical implementation strategies.

Don’t let privacy concerns hold your school back from leveraging the transformative potential of AI in education. Enrol your staff in our Education AI™ course today and take the first step towards creating a safe and responsible AI-driven learning environment for your staff and students.